\\kill_chain is a piece for prepared cello, live electronics, and live video, composed for and in collaboration with Prof. Sonja Lena Schmid as part of her research into Charlotte Moorman’s Bomb Cello. It was premiered by Sonja at the Westend in Tübingen on 23 November 2024 and recorded by SWR. The radio premiere was on 17 June 2025. The premiered version had a duration of 28 minutes.

-> Recording

Background

While Charlotte Moorman staged Cold War anxieties through the symbolic presence of her bomb-strapped cello, kill_chain explores a more contemporary form of violence: systemic, remote, and emotionally abstract. My engagement with the bureaucracy of violence has long been shaped by the media landscape of my youth.

The release of the video Collateral Murder1 marked a foundational moment in my political awareness. A few years later, public debates around drone strikes coordinated from U.S. command centres in Germany further shaped my understanding of contemporary warfare as something technologically advanced, spatially detached, and morally diffuse (DiNapoli, 2019; ECCHR, 2024).

While these events did not directly trigger the composition of kill_chain, they remained latent references – informing the ethical vocabulary through which I later approached the topic. When I began the piece in the summer of 2024, these memories resurfaced and aligned with contemporary discussions around artificial intelligence, remote control, and algorithmic decision-making in military contexts. Rather than dramatising violence, I sought to explore the abstract structures and procedures through which it is enacted.

Earlier in 2024, a visit to the Hello Robot exhibition at the Vitra Design Museum introduced me to Ruben Pater’s Drone Survival Guide2, a surreal mix of activism and tactical education.

Around the same time, discussions about artificial intelligence in warfare and a story about a German tank-tracking AI mistaking a picnic for a military target3 led me to see AI as the contemporary analogue of Moorman’s Cold War-era bombs. Through various reports, especially those concerning Israeli military use of AI, I became convinced that machine logic – disembodied and normalised through administrative processes – is today’s frontline technology of violence. The object of interest might have changed since the Cold War, but the sense of unease at the possibility of remote-controlled destruction remains the same.

The title kill_chain references the formalised military protocol that outlines the process of identifying, tracking, and eliminating targets. These steps – also known as F2T2EA, for find, fix, track, target, engage, assess – disturbingly embody the bureaucratic coldness of killing. This detachment resonates with my position as a privileged spectator, grappling with questions of moral implication and technological agency from home, from a safe space. Where Collateral Murder demands condemnation, the ethical calculus of AI-assisted warfare remains unresolved. What happens when identification becomes a task delegated to code? Is a quicker war a lesser evil? (Trumbull, 2023)

Moorman’s work fascinated me by revealing the tension between threat and fragility: weapons disguised as instruments. I see in kill_chain a historical continuation of her idea – a reconfiguration of its symbolic elements for the 21st century. This would not have been possible without the research of Prof. Sonja Lena Schmid, whose investigations into Moorman’s Bomb Cello and performance-based music encouraged me to embrace durational composition and conceptual rigour. Her openness made it possible to integrate video, extend the performance time, and tackle a critical topic.

kill_chain also operates within broader networks of reference. It shares aesthetic and conceptual ground with Johannes Kreidler’s The Wires4, ANTON*’s Hidden in Plain Sight5, the aesthetics of social media excess, harsh noise, hyperpop, and extreme virtuosity.

Concept

kill_chain translates the cold formalism of algorithmic military operations – specifically AI-assisted target identification – into musical structure and sonic language. It takes as its central metaphor the “kill chain” protocol used by modern armed forces, with each step corresponding to formal and sonic strategies embedded in the composition:

- Find: The software listens to the cello signal and estimates possible stable pitch centres.

- Fix: Once a centre is found, the system locks onto a pitch.

- Track: The locking stability is continuously monitored.

- Target/Engage/Assess: If a threshold condition is met, a “countermeasure” is activated based on the timbre type.

A key structural decision was inspired by testimony from Israeli soldiers describing their experience using the Lavender AI system during operations in Gaza. One quote in particular:

I would invest 20 seconds for each target at this stage, and do dozens of them every day.

(McKernan, 2024)

This quote led to the formal constraint that each musical module performed by the cello must remain under 30 seconds. Structurally, kill_chain consists of a seemingly endless number of repetitions following an A-B form: A – the cello performs a physical musical gesture; B – a machine voice recites a new fragment of military testimony.

This endless procedural structure reflects the madness found in a quote from another soldier:

You have another 36,000 waiting. […] You immediately move on to the next target. Because of the system, the targets never end.

(McKernan, 2024)

This also informed the form and the use of the visual system:

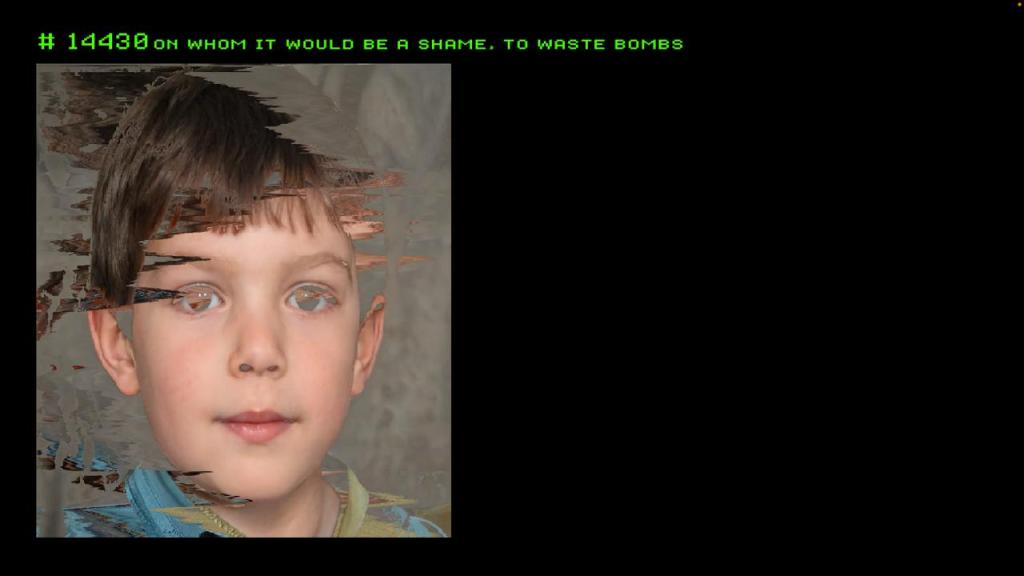

Every note played on the cello is counted and illustrated with a photorealistic face generated by ThisPersonDoesNotExist.6

Initially, the idea was to depict 36,000 faces – mirroring the number of targets mentioned in reports – but due to physical and temporal limits, the final count reached 19,750 in the premiere performance. The density of visual and sonic repetition almost certainly evokes moments of numbness in the audience, only to reveal small changes that recapture attention. The piece allows for multiple paths of perception: cognitive fatigue is not a bug but a compositional feature. The idea of letting the audience experience fatigue in one mode of perception while offering a different path of engagement is informed by IRMA (Interactive Real-time Measurement of Attention; Pirchner, 2019), which showed that wandering attention in multimedia compositions follows certain basic rules. The main takeaway, and its application in kill_chain, is that the audience’s attention will (!) wander between sound, visuals, and performer at all times, but can be guided towards any one of these three sources of interest by a perceptual accent of any kind.

The video projection in this regard serves not only as an extension of the auditory logic but as a commentary on data overload and surveillance aesthetics. Thousands of faces – nonexistent but believable – blur past, mirroring the loss of individuality in bureaucratic violence. Each note becomes a datapoint, each face a statistic. The speed and repetition actively blur the line between empathy and exhaustion.7

The performer never merges with the machine; there is always resistance. Exhaustion and fragility become sonic expressions of ethical tension. The machine never sleeps. It watches the performer relentlessly.

Importantly, the piece does not offer a clear moral resolution. It presents the seductive neatness of algorithmic decisions alongside the monstrous potential of statistical killing. By showing faces, quoting justifications, and mimicking strike procedures, kill_chain draws attention to our desensitisation and questions the comfort we may find in passive complicity.

The result is not a didactic critique but an unsettling witnessing. kill_chain is as much an aesthetic artefact as it is a procedural exposure – a sound-based inquiry into the mechanics of detached violence and the limits of empathy.

Realisation

All sound processing was realised in SuperCollider, while the video was played back and manipulated using Max/MSP/Jitter.

All human faces were scraped from ThisPersonDoesNotExist.com via a Python script.

The dlib and face-recognition libraries were used to add facial feature detection to the scraped faces (King, 2009; Geitgey, 2025).

The timbre detection was realised using Rebecca Fiebrink’s Wekinator8 (Fiebrink, 2009), which was trained using the same contact mic setup as in concert to ensure uniformity of data.
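As a rough illustration of the data path into Wekinator, the following Python sketch packs a feature vector into an OSC message by hand and sends it over UDP. It is not the code used in the piece, where all sound processing ran in SuperCollider. The function names are hypothetical, the 21-to-20 coefficient layout follows the MFCC description further below, and the address `/wek/inputs` and port 6448 are assumed to match Wekinator's default input settings.

```python
import socket
import struct


def _osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_float_message(address, values):
    """Build a single OSC message that carries only 32-bit big-endian floats."""
    type_tags = "," + "f" * len(values)
    payload = struct.pack(f">{len(values)}f", *values)
    return _osc_string(address) + _osc_string(type_tags) + payload


def wekinator_packet(mfccs_21):
    """Drop the first of 21 MFCCs and wrap the remaining 20 as one input vector."""
    if len(mfccs_21) != 21:
        raise ValueError("expected 21 coefficients per onset")
    return osc_float_message("/wek/inputs", list(mfccs_21[1:]))


def send_features(mfccs_21, host="127.0.0.1", port=6448):
    """Send one feature vector per detected onset (host and port are assumptions)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(wekinator_packet(mfccs_21), (host, port))
```

Calling `send_features(coefficients)` once per onset would deliver one 20-dimensional input to a Wekinator project configured for 20 inputs. Training and running the classifier then happen inside Wekinator itself.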

The electronic structure of the piece is grounded in two reactive systems, each offering a distinct commentary on the aesthetics of automation, machinic perception, and the coldness of bureaucratic structures:

- Call and Response: The cello’s loudness is tracked, and whenever it falls below a baseline threshold set slightly above the microphone’s background noise level – indicating that the performer is resting – a line from the selected quotes and lyrics is spoken by an AI voice.9

- SonicDisruption: A scaled-up version of the signal is used to determine peaks at the waveform level; these peaks are counted. Once enough peaks have been detected, an array of Phase Locked Loops (PLLs) is invoked to determine the pitch of the signal. The threshold number of peaks is controlled by the sound director, which allows manual control over the density of tracking operations. Once enough instances of the PLLs have locked onto a pitch,10 one of two reactions is triggered:

  - Sinusoid Distortion Product: A sonic “attack” with high-frequency sine clusters (13–15 kHz) that uses the phenomenon of otoacoustic emissions11 to induce a sound in the listener’s ear.

  - Absolute Signal Cello Feedback: Playback of the transformed cello signal through a transducer that is attached to the bridge of the cello.
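The decision logic of the two reactive systems can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the SuperCollider implementation: the function and class names are hypothetical, the 6 dB margin is an arbitrary placeholder, the PLLs themselves are not modelled (only their frequency readings), and "normalised" is assumed here to mean division by the mean frequency.

```python
import math
import statistics
from collections import deque


def rms_db(block):
    """Root-mean-square level of a block of samples, in dB relative to full scale."""
    rms = math.sqrt(sum(x * x for x in block) / len(block))
    return 20 * math.log10(max(rms, 1e-12))


def is_resting(block, noise_floor_db, margin_db=6.0):
    """Call and Response: the performer counts as resting when the level falls
    below a threshold set slightly above the microphone noise floor."""
    return rms_db(block) < noise_floor_db + margin_db


def count_peaks(block, gain, threshold):
    """SonicDisruption: count local maxima above a threshold in the scaled-up signal."""
    scaled = [gain * x for x in block]
    return sum(
        1
        for prev, cur, nxt in zip(scaled, scaled[1:], scaled[2:])
        if cur > threshold and cur > prev and cur >= nxt
    )


class LockMonitor:
    """Stores PLL frequency readings for the most recent onsets and tests
    whether their spread is small enough to count as 'locked on'."""

    def __init__(self, n_onsets=50, max_std=0.1):
        self.history = deque(maxlen=n_onsets)
        self.max_std = max_std

    def add_onset(self, pll_freqs_hz):
        """Record the frequencies of all currently active PLLs at one onset."""
        self.history.append(list(pll_freqs_hz))

    def locked_on(self):
        """True once the window is full and the normalised spread is below max_std."""
        freqs = [f for onset in self.history for f in onset]
        if len(self.history) < self.history.maxlen or len(freqs) < 2:
            return False
        mean = statistics.fmean(freqs)
        normalised = [f / mean for f in freqs]  # assumption: normalise by the mean
        return statistics.pstdev(normalised) < self.max_std
```

In such a setup, `count_peaks` would gate when the PLL array is consulted, and `locked_on` would decide when one of the two reactions fires; which reaction is chosen depends on the timbre class and is left out here.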

In parallel, for each onset an array of 20 MFCCs12 is sent via OSC to Wekinator, where a classifier model is trained for timbre recognition. For each of the timbres used in the cello part a different […]

Alongside the cello and interactive electronics parts, another probabilistic process runs in the background. Every second, a chance operation determines whether a chord of detuned subharmonics is played. Each of the four speakers is assigned five oscillators, initialised with unique phase offsets. During performance, a random subset of oscillators (3 to 9) is activated to play subharmonics of the last stable PLL frequency. Each $f_{\mathrm{sub}}$ is calculated from a given subdivision ratio $r$ and a random detuning $\Delta_{\mathrm{detune}}$ in the range of ±1 semitone. These very low frequency interferences are used to evoke a psychoacoustic feeling of unease.13
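A minimal Python sketch of the subharmonic chord process follows. The text does not give the exact formula, so the sketch assumes that a subharmonic is the last stable PLL frequency divided by the ratio r and then shifted by the detuning in equal-tempered semitones. The set of ratios, the 50 % probability, and all names are hypothetical placeholders.

```python
import random

N_SPEAKERS = 4
OSCS_PER_SPEAKER = 5


def subharmonic(f_pll, r, detune_semitones):
    """Assumed formula: f_sub = (f_pll / r) * 2 ** (detune / 12)."""
    return (f_pll / r) * 2.0 ** (detune_semitones / 12.0)


def maybe_chord(f_pll, ratios=(2, 3, 4, 5, 6, 7, 8), probability=0.5, rng=random):
    """Meant to be called once per second: a chance operation decides whether a
    chord sounds. Returns a list of (speaker, oscillator, frequency) tuples,
    or an empty list if no chord is played."""
    if rng.random() >= probability:
        return []
    oscillators = [
        (speaker, osc)
        for speaker in range(N_SPEAKERS)
        for osc in range(OSCS_PER_SPEAKER)
    ]
    # Activate a random subset of 3 to 9 of the 20 oscillators.
    active = rng.sample(oscillators, rng.randint(3, 9))
    return [
        (speaker, osc, subharmonic(f_pll, rng.choice(ratios), rng.uniform(-1.0, 1.0)))
        for speaker, osc in active
    ]
```

With a PLL frequency of 220 Hz and these placeholder ratios, the resulting tones would lie roughly between 26 Hz and 117 Hz, and the small random detunings between them produce slow beating.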

The cello part is structured in 54 self-similar fragments built around 10 base models, which are derived from three basic gestalt principles:

- A series of very fast notes over an open-string drone.

- A double flageolet where one note is played as a trill.

- A free-form model that connects flageolet chords.

The majority of the models are interrelated, with higher-numbered models being derived from lower-numbered ones. Originally, I intended the musician to be free to arrange the order of the models. During rehearsals, however, Sonja suggested establishing a fixed order to facilitate and emphasise a coherent energetic and dramatic progression.

The video system reflects the mechanical logic of the audio systems. For every cello note detected, a new AI-generated face appears. 19,750 faces appear over 28 minutes – on average approximately 12 faces per second (19,750 / 1,680 s ≈ 11.8). During PLL tracking phases, synthetic overlays appear on top of the AI faces;14 during disruption phases, pixel sorting corrupts the image.
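Pixel sorting is commonly implemented by reordering runs of pixels by brightness. The sketch below is a generic plain-Python illustration of that idea, not the Jitter patch used in the piece. The Rec. 709 luminance weights, the brightness threshold, and the row-wise direction are assumptions, and the function names are hypothetical.

```python
def luminance(pixel):
    """Approximate brightness of an (r, g, b) pixel using Rec. 709 weights."""
    r, g, b = pixel
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def pixel_sort_row(row, threshold):
    """Sort every contiguous run of pixels at or above the threshold by luminance.
    Pixels below the threshold stay where they are."""
    out = list(row)
    start = None
    # A trailing None acts as a sentinel that closes a run ending at the row edge.
    for i, px in enumerate(list(row) + [None]):
        bright = px is not None and luminance(px) >= threshold
        if bright and start is None:
            start = i
        elif not bright and start is not None:
            out[start:i] = sorted(out[start:i], key=luminance)
            start = None
    return out


def pixel_sort_image(image, threshold=100):
    """Apply the row-wise sort to an image given as a list of rows of pixels."""
    return [pixel_sort_row(row, threshold) for row in image]
```

Applied to a face image, this kind of sort smears bright regions into horizontal gradients while dark regions stay intact, which gives the characteristic corrupted look.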


The mechanistic brutality is opposed by human fragility, which is rendered not only through the delicacy of ethereal flageolet sounds but mainly through accumulated exhaustion. Extended passages of left-hand tapping, as well as too few rests between modules, may induce cramps, increasing the challenge of maintaining full control over flageolet trills, bow, and percussive clarity.

The system proceeds steadily, its algorithms indifferent to strain, while the performer navigates the procedural demands with a body that remembers limits. kill_chain does not explain or resolve; it documents. In doing so, it gestures toward the metamodern condition as described by Zavarzadeh – one that neither collapses into irony nor clings to sincerity, but instead insists on witnessing, on enduring, and perhaps, on learning how to be “willing to live it” (McKernan, 2024), willing to live with what cannot be assimilated.

- The release of the video Collateral Murder by WikiLeaks in 2010 marked a pivotal moment in the public understanding of modern warfare and journalistic whistleblowing. Capturing a 2007 U.S. Apache helicopter strike in Baghdad that killed multiple individuals – including two Reuters journalists – the footage provoked global outrage for its stark portrayal of civilian casualties and the casual dialogue between soldiers during the attack. The video’s publication led to the imprisonment of whistleblower Chelsea Manning and catalysed ongoing legal actions against WikiLeaks founder Julian Assange. Despite confirming the video’s authenticity, the U.S. Department of Defense maintained the legality of the actions shown, triggering criticism from human rights organisations. ↩︎

- The Drone Survival Guide is a graphic design project developed by Dutch designer Ruben Pater in 2013. This guide serves as an educational tool, aiming to familiarise the public with the increasing presence of unmanned aerial vehicles (UAVs) in modern airspace.

It features silhouettes of 27 commonly used drones, drawn to scale, and provides information on their functions – whether for surveillance or armed operations.

Additionally, the guide offers strategies for evading drone detection, such as using reflective materials to confuse visual sensors or broadcasting on specific frequencies to disrupt communication links (Pater, 2013). ↩︎

- Although it was told as a true story, this turned out to be nothing more than an urban legend! ↩︎

- The Wires (2016) by Johannes Kreidler is a multimedia composition for cello, electronics, and video that investigates the theme of borders – both physical and metaphorical – through a juxtaposition of the associated violence of barbed wire and bullets with the tactile vulnerability of the instrument. The piece draws from U.S. barbed wire patents of the late 19th century and a catalogue of bullet types, using these depictions as sonic blueprints. ↩︎

- Hidden in Plain Sight (2017) by ANTON* is a multimedia composition for English horn, clarinet in B-flat, piano, guitar, violin, and sound director. The piece reflects on how repeated exposure to news of violence – such as terror attacks – leads to emotional numbness. It draws attention to the act of swiping away push notifications, turning a gesture of convenience into a metaphor for collective detachment. Instead of dramatising violence, the work explores its ambient presence in everyday digital life. The title points to the paradox of visibility without perception. Influenced by Jacques Attali’s ideas, the piece invites listeners to hear what society often chooses not to see. ↩︎

- ThisPersonDoesNotExist.com generates AI-produced human portraits using GANs. Created by Philip Wang in 2019 using NVIDIA’s StyleGAN model, each face is fictional yet eerily lifelike (Wang, 2019). ↩︎

- In a Žižekian way: we have to sustain the tension, the crisis, until it tears open something new! ↩︎

- Wekinator is an open-source, real-time interactive machine learning system developed by Rebecca Fiebrink. Communicating via the OSC protocol, it allows artists, musicians, and designers to train models that map any kind of input – audio, motion sensors, video data – to output controls such as sound synthesis parameters, visuals, or robotics, without writing code. ↩︎

- In this case the speaker is a standard AI voice from elevenlabs.io called “Roger”. This speaker is commonly used in voice-overs of so-called “sludge content”.

“Sludge content” refers to split-screen videos designed to maximise viewer engagement through overstimulation, often combining unrelated visual streams such as gameplay footage, soap operas, and “satisfying loops”. German commentators frame sludge content as a “distraction of distraction,” suggesting it reflects an exhausted cultural state of hypermediated consumption (Baumgaertel, 2023). In this ecosystem, AI voiceovers – frequently layered over visual sludge – serve to heighten the disembodied, affectless quality of the experience, reinforcing the machinic flattening of narrative and emotional tone (Bink, 2023). ↩︎

- “Locked on” is defined as follows: the standard deviation of the normalised frequencies of all currently active PLLs over the last 50 detected note onsets of the cello voice is smaller than 0.1. ↩︎

- Otoacoustic emissions are sounds generated by the ear itself. These are audible phenomena that occur either spontaneously or, more commonly, are elicited through acoustic stimulation of the ear. In general, three distinct types of emissions are distinguished:

1. transient-evoked otoacoustic emissions (TEOAE)

2. stimulus-frequency otoacoustic emissions (SFOAE)

3. distortion-product otoacoustic emissions (DPOAE)

In the case of distortion-product otoacoustic emissions (DPOAEs), these are nonlinear distortions generated by the cochlea (Kemp, 1978). For nearly two decades, they have constituted an important method for the non-invasive assessment of auditory function and are therefore primarily used in diagnostic contexts (Abdala et al., 2001). Although they were theoretically described as early as 1948 (Hall, 2000), they were not empirically demonstrated until 1978 by David T. Kemp. Without being able to describe the phenomenon in physical or biological terms, Maryanne Amacher had already published a work in 1977 that addressed these emissions, during her work on electroacoustic compositions (Amacher, 2008). Amacher’s work primarily engages with a phenomenon that would later be described by Kendall et al. as quadratic distortion products (qDp) (Kendall et al., 2014). The qDp is identical to the difference tones described by Georg Andreas Sorge as early as the 18th century (Kroesbergen, 2020), which, however, were not applied compositionally but were mainly used for tuning musical instruments. In the case where frequency $f_1 < f_2$, the frequency of the qDp is calculated as follows:

$$f_{\mathrm{qDp}} = f_2 - f_1$$

↩︎

- In total, 21 MFCCs are generated; the first one is dropped. ↩︎

- Very low frequency interferences – especially in the infrasonic range or as slow amplitude beatings – have been shown to induce perceptual states of unease, tension, or physiological discomfort in certain conditions (Leventhall, 2003; Ballas, 1993). ↩︎

- For practical reasons I generated two videos, one without and one with facial feature overlay, that were played back in parallel. Only the one corresponding to the current state of the tracking algorithm was displayed on screen. ↩︎

ABDALA, Caroline ; VISSER-DUMONT, Leslie: Distortion Product Otoacoustic Emissions: A Tool for Hearing Assessment and Scientific Study. In: The Volta review 103 (2001), Nr. 4, 281–302. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3614374/

AMACHER, Maryanne: Psychoacoustic Phenomena in Musical Composition: Some Features of a Perceptual Geography. In: Arcana III: Musicians on music. New York : Hips Road ; Distributed by Distributed Art Publishers, 2008, S. 16–25

BALLAS, James A.: Common factors in the identification of an assortment of brief everyday sounds. In: Journal of Experimental Psychology: Human Perception and Performance 19 (1993), Nr. 2, S. 250–267. http://dx.doi.org/10.1037/0096-1523.19.2.250

BAUMGAERTEL, Tilman: Sludge Content: Zerstreuung der Zerstreuung. https://www.zeit.de/kultur/2023-06/sludge-content-tiktok-video-split-screen, Version: 2023

BINK, Addy: What are sludge videos on TikTok? Are they harmful? https://ktla.com/entertainment/what-are-sludge-videos-on-tiktok-are-they-harmful/. Version: 2023

DINAPOLI, Emma: German Courts Weigh Legal Responsibility for U.S. Drone Strikes. In: Lawfare (2019). https://www.lawfaremedia.org/article/german-courts-weigh-legal-responsibility-us-drone-strikes

EUROPEAN CENTER FOR CONSTITUTIONAL AND HUMAN RIGHTS: Ramstein at court: Germany’s role in US drone strikes in Yemen. https://www.ecchr.eu/en/case/important-judgment-germany-obliged-to-scrutinize-us-drone-strikes-via-ramstein/, Version: 2024

FIEBRINK, Rebecca ; TRUEMAN, Dan ; COOK, Perry R.: A Meta-Instrument for Interactive, On-the-Fly Machine Learning. In: Proceedings of the International Conference on New Interfaces for Musical Expression (NIME). Pittsburgh, PA, USA, 2009, 280–285

GEITGEY, Adam: face-recognition: Recognize and manipulate faces from Python or from the command line. https://pypi.org/project/face-recognition/

HALL, James W.: Handbook of otoacoustic emissions. San Diego : Singular Publ. Group, 2000

KEMP, D. T.: Stimulated acoustic emissions from within the human auditory system. In: The Journal of the Acoustical Society of America 64 (1978), November, Nr. 5, 1386–1391. http://dx.doi.org/10.1121/1.382104

KENDALL, Gary S. ; HAWORTH, Christopher ; CÁDIZ, Rodrigo F.: Sound Synthesis with Auditory Distortion Products. In: Computer Music Journal 38 (2014), December, Nr. 4, 5–23. http://www.mitpressjournals.org/doi/10.1162/COMJ_a_00265

KING, Davis E.: Dlib-ml: A Machine Learning Toolkit. In: Journal of Machine Learning Research 10 (2009)

KROESBERGEN, Willem ; CRUICKSHANK, Andrew J.: 18th Century Quotations Relating to J.S. Bach’s Temperament. https://www.academia.edu/5210832/18th_Century_Quotations_Relating_to_J.S._Bach_s_Temperament, 2020-07-02

LEVENTHALL, Geoffrey: A Review of Published Research on Low Frequency Noise and its Effects / Department for Environment, Food and Rural Affairs (DEFRA), UK. Version: 2003. https://webarchive.nationalarchives.gov.uk/ukgwa/20130402151656/http://archive.defra.gov.uk/environment/quality/noise/research/lowfrequency/documents/lowfreqnoise.pdf

MCKERNAN, Bethan ; DAVIES, Harry: ‘The machine did it coldly’: Israel used AI to identify 37,000 Hamas targets. In: The Guardian (2024), 4. https://www.theguardian.com/world/2024/apr/03/israel-gaza-ai-database-hamas-airstrikes

PATER, Ruben: Drone Survival Guide. https://www.dronesurvivalguide.org/. Version: 2013. – Exhibited at Vitra Design Museum, Weil am Rhein, during “Hello, Robot. Design between Human and Machine”

PIRCHNER, Andreas: IRMA (Interactive Real-time Measurement of Attention). A method for the investigation of audiovisual computer music performances. In: Proceedings of the 2019 International Computer Music Conference (ICMC). New York, USA : International Computer Music Association, 2019

TRUMBULL, Charles: Collateral Damage and Innocent Bystanders in War. In: Lieber Institute – Articles of War (2023). https://lieber.westpoint.edu/collateral-damage-innocent-bystanders-war/

WANG, Philip: This Person Does Not Exist. https://designmuseum.org/exhibitions/beazley-designs-of-the-year/digital-2019/this-person-does-not-exist. Version: 2019